Machine Learning Helps Reveal What Makes Games Complex for People

This article explores how machine learning (ML) and artificial intelligence (AI) are changing the study of human behavior, particularly strategic decision-making in economic games. Human behavior is variable and complex, and traditional methods for studying it are now being supplemented by large-scale online experiments and sophisticated ML models.

The Challenge of Understanding Human Behavior

Unlike physical systems, which follow predictable laws, human behavior is notoriously difficult to capture with simple laws. Psychologists and economists are now using new toolkits, including online experiments and ML, to uncover the principles underlying human actions. A recent paper in Nature Human Behaviour applies these tools to strategic decisions in economic games.

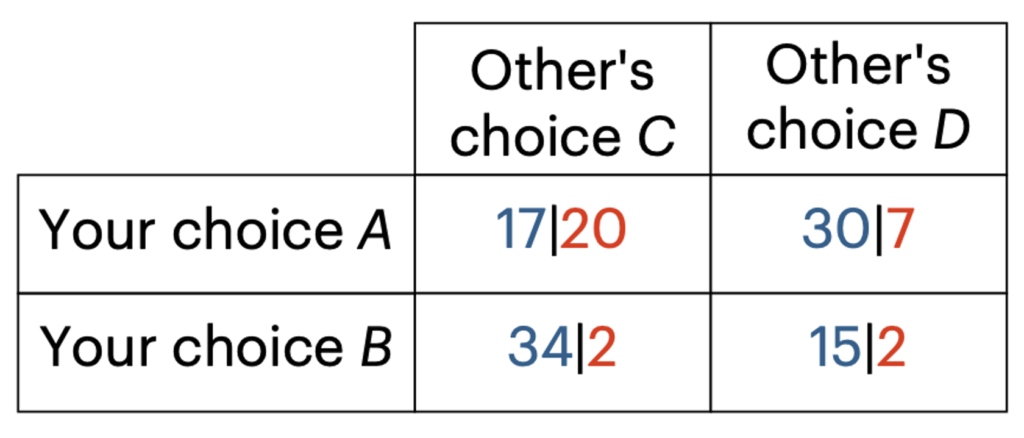

A Typical Matrix Game Example

The article illustrates its points using a typical matrix game. In this game, a row player chooses between options A and B, with payoffs indicated in blue. They are paired with a column player who chooses between C and D, with payoffs in red. The example shows a 2x2 matrix where, for instance, if the row player chooses A and the column player chooses C, the row player earns 17 points and the column player earns 20 points. Participants are compensated with real money based on their performance in randomly selected games.
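To make the setup concrete, here is a minimal sketch of how such a 2x2 game could be stored and queried. Only the (A, C) cell, 17 points for the row player and 20 for the column player, comes from the example above; the other three cells and the names `GAME`, `payoffs`, and `expected_row_payoff` are hypothetical.

```python
# Payoffs as (row player's points, column player's points).
# Only the (A, C) cell is taken from the article; the rest are made up.
GAME = {
    ("A", "C"): (17, 20),
    ("A", "D"): (5, 12),   # hypothetical
    ("B", "C"): (9, 3),    # hypothetical
    ("B", "D"): (14, 8),   # hypothetical
}


def payoffs(row_choice, col_choice, game=GAME):
    """Return (row_points, col_points) for one pair of choices."""
    return game[(row_choice, col_choice)]


def expected_row_payoff(row_choice, p_col_c, game=GAME):
    """Row player's expected points if the column player picks C
    with probability p_col_c (and D otherwise)."""
    vs_c = game[(row_choice, "C")][0]
    vs_d = game[(row_choice, "D")][0]
    return p_col_c * vs_c + (1 - p_col_c) * vs_d
```

The expected-payoff helper is the basic calculation a row player would need when unsure what the column player will do.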

A Three-Step Pipeline for Analyzing Behavior

The research outlines a three-step pipeline to uncover hidden laws behind strategic behavior:

- Scale Up Data Collection: Utilize crowdsourcing platforms to gather vast amounts of decision data from online participants playing designed games.

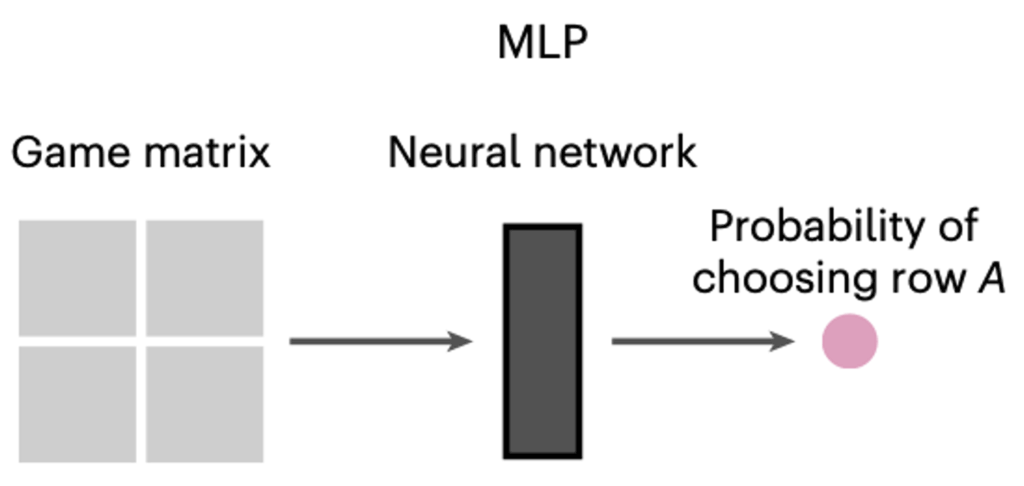

- Automated Discovery with Neural Networks: Employ neural networks to automatically discover the best mapping between game features (what the player sees) and human behavior (what the player does), capturing maximum behavioral variation.

- Interpret the Black Box: Use modern interpretability tools to understand the neural network's learned rules, identifying which game aspects contribute to perceived difficulty and which cues players rely on.

This approach allows researchers to use the trained neural network as a stand-in for the average person's mind, enabling exploration of 'what-if' scenarios without running new experiments. This helps in developing cognitive theories about why certain games are intuitive and others are challenging.

Developing Theories of Strategic Choices with Neural Networks

The study involved generating over 2,400 unique 2x2 matrix games and collecting more than 90,000 human decisions. A neural network model was built to predict these choices, explaining approximately 92% of the variance in human decisions on unseen games.
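As a rough illustration of what "variance explained" means, the sketch below computes the coefficient of determination (R²) between observed and predicted choice rates on held-out games. The function name and the toy numbers are hypothetical, and the paper's exact evaluation metric may differ.

```python
def r_squared(observed, predicted):
    """Share of the variance in observed choice rates captured by predictions."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


# Made-up share of players choosing option A in four held-out games.
observed = [0.80, 0.35, 0.60, 0.10]
predicted = [0.75, 0.40, 0.55, 0.15]
score = r_squared(observed, predicted)
```

A score of 1.0 would mean the predictions match the observed choice rates exactly; a score near 0 would mean they do no better than always predicting the average rate.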

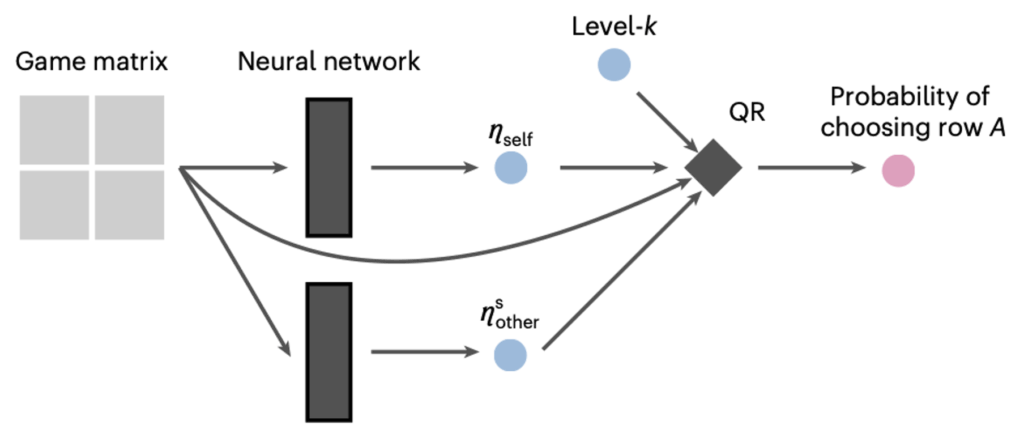

To gain clearer theoretical insights, the researchers introduced structure inspired by behavioral game theory, such as level-k thinking (a limited number of reasoning steps) and a quantal response function (randomness in decision-making). These structured models embed assumptions about human rationality into the neural network. The final prediction comes from a behavioral model (such as level-2 quantal response), but the parameters of that model are generated by a neural network that adapts to the specific game matrix.
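The following is a minimal sketch of one common textbook form of a level-2 quantal response model for a 2x2 game, not the authors' implementation. It assumes a level-0 player who randomizes uniformly, a logit response rule, and two fixed precision parameters (`belief_precision`, `choice_precision`) that are hypothetical names; in the structured models described above, such parameters would be produced per game by a neural network rather than set by hand.

```python
import math


def logistic(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def quantal_response(u_first, u_second, precision):
    """Probability of picking the first of two options under a logit rule.
    precision = 0 gives 50/50; large precision approaches a best response."""
    return logistic(precision * (u_first - u_second))


def level2_row_choice(row_pay, col_pay, belief_precision, choice_precision):
    """P(row plays A) for a level-2 row player.

    row_pay[i][j] and col_pay[i][j] are payoffs when the row player picks
    i (0 = A, 1 = B) and the column player picks j (0 = C, 1 = D).
    """
    # Assumption: the level-0 row player picks A or B with equal probability.
    # The level-1 column player responds noisily to that level-0 player.
    eu_c = 0.5 * col_pay[0][0] + 0.5 * col_pay[1][0]
    eu_d = 0.5 * col_pay[0][1] + 0.5 * col_pay[1][1]
    p_c = quantal_response(eu_c, eu_d, belief_precision)

    # The level-2 row player responds noisily to the believed level-1 opponent.
    eu_a = p_c * row_pay[0][0] + (1 - p_c) * row_pay[0][1]
    eu_b = p_c * row_pay[1][0] + (1 - p_c) * row_pay[1][1]
    return quantal_response(eu_a, eu_b, choice_precision)
```

Lower precision means more noise: with both precisions at zero the predicted choice is a coin flip, whatever the payoffs are.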

One highly effective structured model, which assumes level-2 reasoning with behavioral and belief noise, explained about 88% of the variance in human decisions, close to the roughly 92% achieved by the unconstrained model.

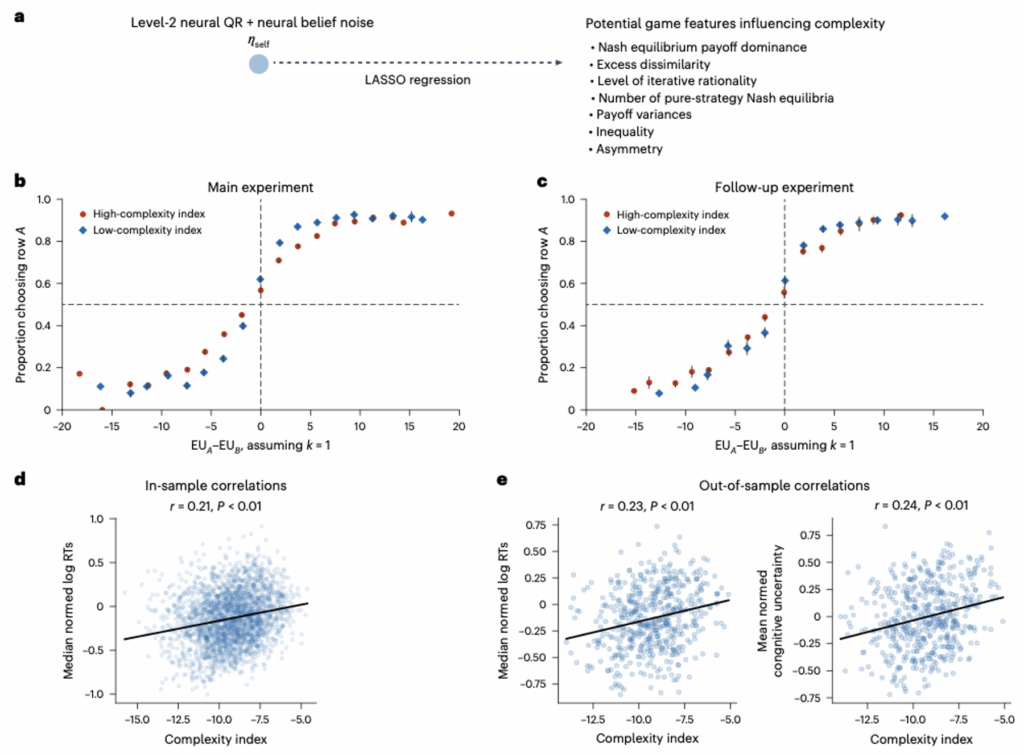

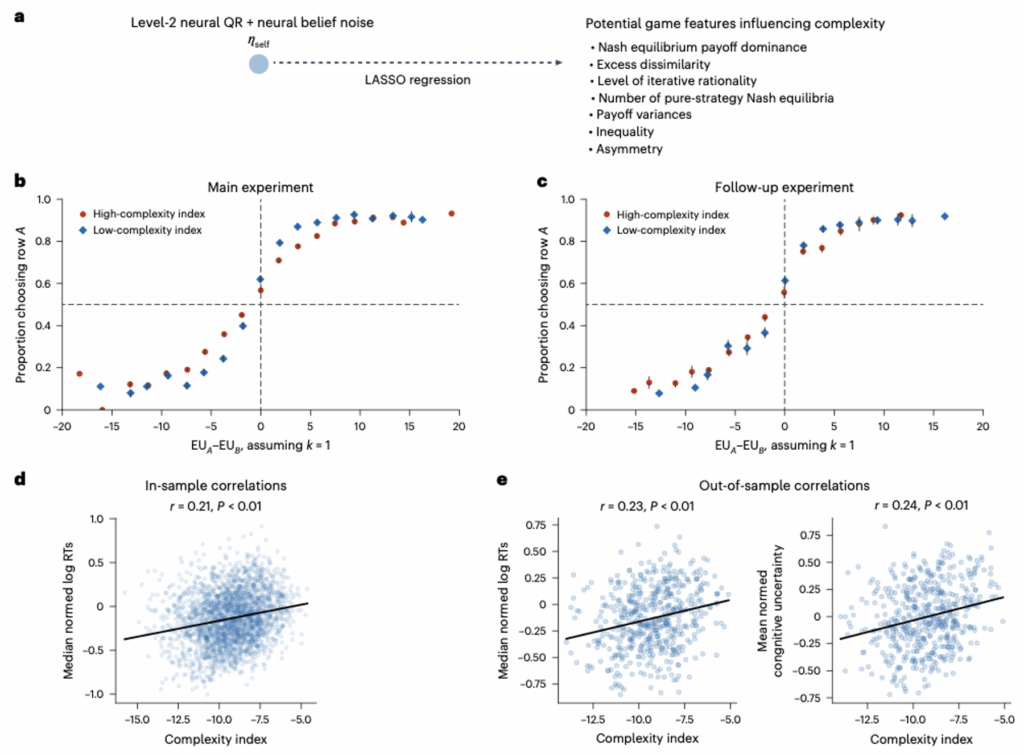

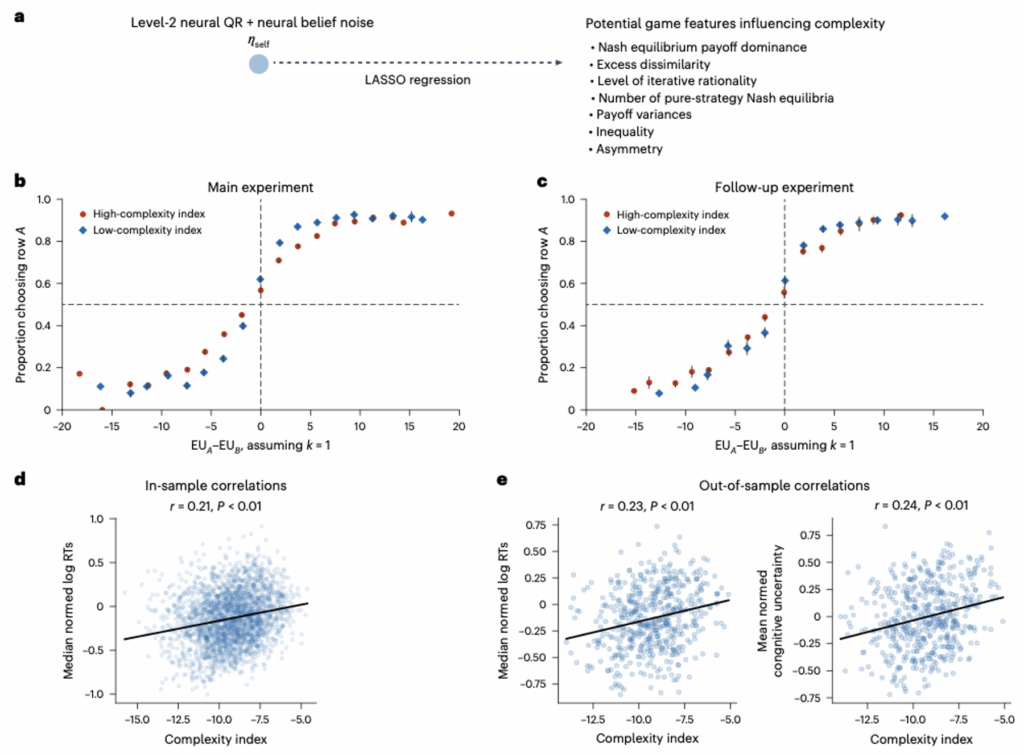

Searching for Game Complexity

The success of the structured neural network model is attributed to its ability to adjust noise terms (behavioral and belief noise) based on individual games. This context-dependent noise variation is something traditional models struggle to capture. To understand why certain games are noisier, the researchers used LASSO regression to identify key game features influencing this noise.
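For reference, LASSO regression in its standard form fits a linear model with an L1 penalty that pushes the coefficients of unhelpful features to exactly zero. The symbols below are assumptions for illustration: $\sigma_g$ is the noise level the network assigns to game $g$, $x_g$ is a vector of candidate game features, $n$ is the number of games, and $\alpha$ is the penalty strength.

$$
\hat{\beta} = \arg\min_{\beta_0,\,\beta} \; \frac{1}{2n} \sum_{g=1}^{n} \left( \sigma_g - \beta_0 - x_g^{\top} \beta \right)^2 + \alpha \lVert \beta \rVert_1
$$

Features whose coefficients remain nonzero after fitting are the ones retained as predictors of noise.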

Three key features emerged as significant predictors of noisiness:

- Payoff Dominance: Games where the Nash equilibrium payoff clearly surpasses other outcomes are simpler.

- Arithmetic Difficulty: Games in which working out the expected payoff differences takes harder arithmetic are more complex.

- Thinking Depth: Games that require more reasoning steps to reach a rational decision are more complex.

These features directly influence how complex a game appears to players, which in turn affects the noisiness of their responses.

The Game Complexity Index

Based on these findings, a practical "Game Complexity Index" was developed. This index takes a matrix game as input and outputs a complexity rating, predicting how players will perceive it. Higher complexity ratings correlate with more random choices (closer to 50/50) and longer response times, indicating deeper thought processes.
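One simple way to quantify "closer to 50/50" is the binary entropy of the choice split in a game. This is an illustrative measure only; the function name is hypothetical and the paper may quantify randomness differently.

```python
import math


def choice_entropy(p_first):
    """Binary entropy (in bits) of a two-option choice split.
    Returns 1.0 for a 50/50 split and 0.0 when everyone picks the same option."""
    if p_first <= 0.0 or p_first >= 1.0:
        return 0.0
    p_second = 1.0 - p_first
    return -(p_first * math.log2(p_first) + p_second * math.log2(p_second))
```

Under this measure, a game where 60% of players choose one option counts as more random than a game where 90% do.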

The index also demonstrated generalizability, successfully predicting human behavior in new studies with different participants and games.

Summary

ML and AI are not replacing traditional research methods in economics and psychology; they are strengthening them. With these computational tools, researchers can turn large datasets of human behavior into interpretable insights into cognitive processes, decision-making, and strategic play.

- The research highlights the synergy between AI/ML and traditional scientific methods.

- Neural networks can uncover complex patterns in human behavior that are difficult to model otherwise.

- A "Game Complexity Index" has been developed, based on payoff dominance, arithmetic difficulty, and thinking depth, to predict how complex a game will seem to players.

- This index correlates with behavioral randomness and response times, and shows generalizability across different studies.

This work, co-authored by Jian-Qiao Zhu and Tom Griffiths, is further explored in Griffiths' forthcoming book, The Laws of Thought: The Quest for a Mathematical Theory of the Mind.

Original article available at: https://blog.ai.princeton.edu/tag/machine-learning/